Introduction

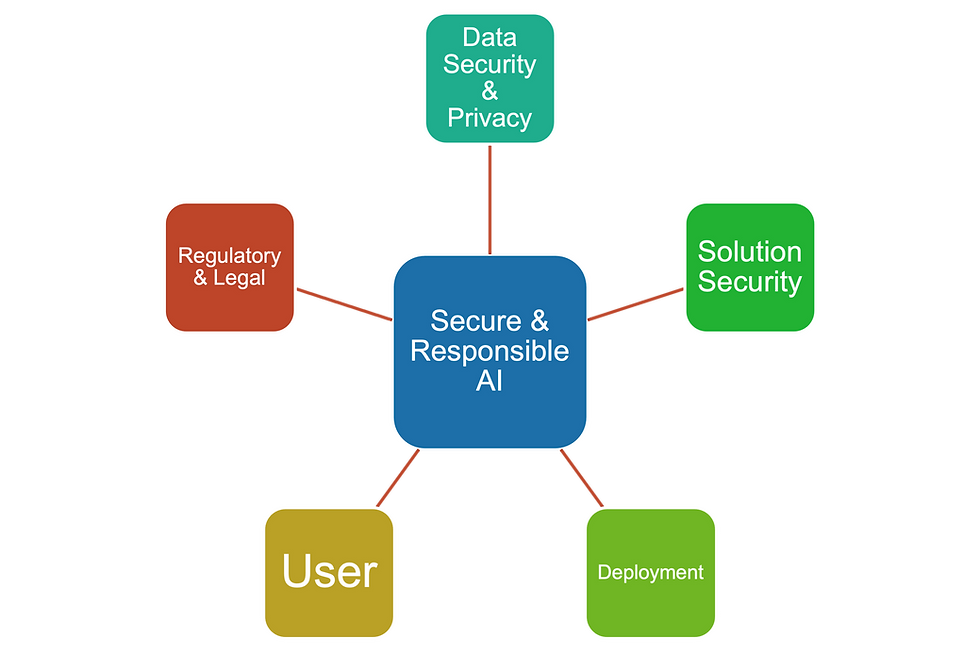

Generative AI has emerged as a powerful tool that lets enterprises analyze large amounts of unstructured data and unlock information and insights that can transform their business. However, allowing unfettered access to highly sensitive enterprise data without strong security and privacy guardrails is a double-edged sword: it can lead to severely negative outcomes for the company, its employees, and external stakeholders. In this post, we discuss the security, privacy, and regulatory considerations an enterprise must keep in mind to deploy Generative AI safely and responsibly.

Data Security & Privacy

An organization's ability to collect, analyze, and interpret data determines how effective and successful it can be. Generative AI is highly data-intensive, so deploying it across the enterprise requires companies to implement stringent security and privacy guidelines.

Minimization: Privacy laws and principles define data minimization as the requirement that personal information be adequate, relevant, and limited to what is necessary in relation to the purposes for which it is processed. In other words, collect only the data that is strictly necessary, and nothing more.
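One way to apply minimization in a data pipeline is an allowlist of fields per processing purpose, so that anything not explicitly needed is dropped before it reaches a GenAI system. The minimal sketch below assumes records arrive as Python dictionaries; the purposes and field names in `ALLOWED_FIELDS` are hypothetical examples, not a standard schema.

```python
from typing import Any

# Hypothetical policy: each processing purpose lists the only fields it may use.
ALLOWED_FIELDS: dict[str, set[str]] = {
    "support_chatbot": {"ticket_id", "product", "issue_text"},
    "sales_forecast": {"region", "product", "units_sold", "month"},
}


def minimize(record: dict[str, Any], purpose: str) -> dict[str, Any]:
    """Return a copy of `record` keeping only the fields allowed for `purpose`."""
    allowed = ALLOWED_FIELDS.get(purpose)
    if allowed is None:
        # No policy for this purpose: fail closed instead of passing everything through.
        raise ValueError(f"no data-minimization policy for purpose {purpose!r}")
    return {key: value for key, value in record.items() if key in allowed}


raw = {
    "ticket_id": 4812,
    "product": "router",
    "issue_text": "Device reboots every hour",
    "customer_email": "jane@example.com",
    "home_address": "12 Elm St",
}
print(minimize(raw, "support_chatbot"))
# {'ticket_id': 4812, 'product': 'router', 'issue_text': 'Device reboots every hour'}
```

Failing closed on an unknown purpose is a deliberate choice: a new use case must be given an explicit policy before it receives any data.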

Anonymization: Remove sensitive information, such as personal details, from training data.
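A simple first pass at removing personal details is pattern-based redaction, which replaces recognizable identifiers with typed placeholders. This is a minimal sketch with illustrative, US-centric patterns (our assumption); note that it does not catch the name "Jane", which is why real pipelines typically add named-entity recognition, and that removing direct identifiers alone does not guarantee anonymity, since the remaining attributes can still allow re-identification.

```python
import re

# Illustrative patterns only. The narrower SSN pattern runs before PHONE.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(
        r"(?<!\d)(?:\+1[-.\s]?)?(?:\(\d{3}\)|\d{3})[-.\s]?\d{3}[-.\s]?\d{4}(?!\d)"
    ),
}


def redact(text: str) -> str:
    """Replace each detected PII span with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


sample = "Contact Jane at jane.doe@example.com or 555-123-4567; SSN 123-45-6789."
print(redact(sample))
# Contact Jane at [EMAIL] or [PHONE]; SSN [SSN].
```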

Encryption: All training data used by Generative AI must be encrypted at rest and in transit to prevent information leakage and unauthorized access.

Role-Based Access Control: Strong role-based security and access control are mandatory when rolling out GenAI solutions. A user must have the rights to access the data used to answer their questions; otherwise the model may reveal information they are not entitled to see (e.g., sensitive personal information about other employees, corporate secrets, or intellectual property).
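In a retrieval-augmented setup (an assumption; the post does not prescribe an architecture), one way to enforce this is to filter retrieved documents against the user's roles before they are placed in the prompt, rather than relying on the model to withhold anything. The `Document`, `User`, and `authorized_context` names below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_roles: frozenset[str]  # roles permitted to read this document


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset[str]


def authorized_context(user: User, retrieved: list[Document]) -> list[Document]:
    """Keep only documents that share at least one role with the user.

    Run this on search results BEFORE building the prompt, so unauthorized
    text never reaches the model."""
    return [doc for doc in retrieved if user.roles & doc.allowed_roles]


docs = [
    Document("handbook", "PTO policy: 20 days per year.", frozenset({"employee", "hr"})),
    Document("salaries", "Salary bands by job level.", frozenset({"hr"})),
    Document("roadmap", "Unreleased product plans.", frozenset({"exec"})),
]
analyst = User("analyst", frozenset({"employee"}))
hr_partner = User("hr_partner", frozenset({"employee", "hr"}))

print([d.doc_id for d in authorized_context(analyst, docs)])     # ['handbook']
print([d.doc_id for d in authorized_context(hr_partner, docs)])  # ['handbook', 'salaries']
```

Filtering at retrieval time means a cleverly worded question cannot talk the model into revealing a document it was never given.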

Data Retention: Any personal information used for GenAI training should be retained only for as long as it is required.
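A retention policy can be enforced with a scheduled job that drops records older than the allowed window. This minimal sketch assumes each record carries a timezone-aware `collected_at` timestamp; the 365-day `RETENTION` value and the `purge_expired` helper are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical retention period; the real value comes from legal and business requirements.
RETENTION = timedelta(days=365)


def purge_expired(
    records: list[dict],
    now: Optional[datetime] = None,
    retention: timedelta = RETENTION,
) -> tuple[list[dict], int]:
    """Return (records still within the retention window, number of records purged)."""
    if now is None:
        now = datetime.now(timezone.utc)
    cutoff = now - retention
    kept = [r for r in records if r["collected_at"] >= cutoff]
    return kept, len(records) - len(kept)


records = [
    {"id": 1, "collected_at": datetime(2023, 1, 15, tzinfo=timezone.utc)},
    {"id": 2, "collected_at": datetime(2024, 3, 10, tzinfo=timezone.utc)},
]
kept, purged = purge_expired(records, now=datetime(2024, 6, 1, tzinfo=timezone.utc))
print([r["id"] for r in kept], purged)  # [2] 1
```

Deleting records from a dataset does not remove their influence from a model that has already been trained on them, so retention decisions also need to account for trained models and other derived artifacts.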

Consent: Obtain explicit user consent before collecting data, as required by applicable regulations.

Data Sourcing and Intellectual Property: Data used for model training must be checked for intellectual property rights and for provenance (where it comes from). Stolen IP used for model training can be just as problematic as intentionally poisoned data.
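A lightweight way to make these checks routine is to require a manifest entry for every training dataset and block any entry with unknown provenance or an unapproved license. The sketch below is illustrative only: the `DatasetEntry` fields and the `APPROVED_LICENSES` allowlist are hypothetical, and deciding which licenses are acceptable remains a legal question.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical allowlist; which licenses are acceptable is a legal decision.
APPROVED_LICENSES = {"CC0-1.0", "CC-BY-4.0", "internal"}


@dataclass
class DatasetEntry:
    name: str
    source: Optional[str]   # where the data came from (system, vendor, URL)
    license: Optional[str]  # license identifier, or "internal" for company-owned data


def review(entries: list[DatasetEntry]) -> tuple[list[str], list[tuple[str, str]]]:
    """Split entries into approved names and (name, reason) pairs needing review."""
    approved: list[str] = []
    flagged: list[tuple[str, str]] = []
    for entry in entries:
        if not entry.source:
            flagged.append((entry.name, "unknown provenance"))
        elif entry.license not in APPROVED_LICENSES:
            flagged.append((entry.name, f"license not approved: {entry.license}"))
        else:
            approved.append(entry.name)
    return approved, flagged


manifest = [
    DatasetEntry("support_tickets", "crm-export", "internal"),
    DatasetEntry("scraped_forum_posts", "https://forum.example.com", None),
    DatasetEntry("vendor_corpus", None, "CC-BY-4.0"),
]
approved, flagged = review(manifest)
print(approved)  # ['support_tickets']
print(flagged)
```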

Solution Security

There are many ways in which Machine Learning and AI models can be abused, and security must be top of mind when designing solutions for the enterprise.

Secure Software Supply Chain: This is the practice of securing the components, activities, and practices involved in building the solution. NIST, among others, has published detailed recommendations and guidelines to help companies adopt these practices.

Adversarial Attacks: These are cybersecurity attacks that manipulate machine learning models with carefully crafted input data. They exploit vulnerabilities in a model's decision-making process to cause misclassifications or faulty outputs.
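To make "carefully crafted input" concrete, here is a toy version of the Fast Gradient Sign Method (FGSM), a well-known adversarial technique, applied to a linear classifier with made-up weights. For a linear model the gradient of the score with respect to the input is just the weight vector, so a small, bounded nudge to every feature is enough to flip the prediction. Attacks on deep networks follow the same idea but obtain the gradient through backpropagation.

```python
# Toy linear classifier with hypothetical weights: predicts 1 if w.x + b > 0, else 0.
W = [0.9, -0.6, 0.4, 0.3]
B = -0.2


def score(x: list[float]) -> float:
    return sum(w * xi for w, xi in zip(W, x)) + B


def predict(x: list[float]) -> int:
    return 1 if score(x) > 0 else 0


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm_linear(x: list[float], eps: float) -> list[float]:
    """Fast Gradient Sign Method specialised to a linear model.

    The gradient of the score with respect to x is simply W, so shifting every
    feature by eps along -sign(W) (or +sign(W)) changes the score as much as
    possible while no single feature moves by more than eps."""
    direction = -1 if predict(x) == 1 else 1  # push the score toward the other class
    return [xi + direction * eps * sign(w) for xi, w in zip(x, W)]


x = [0.5, 0.2, 0.3, 0.1]
x_adv = fgsm_linear(x, eps=0.15)
print(predict(x), predict(x_adv))  # 1 0
```

No feature changes by more than 0.15, yet the score drops from 0.28 to -0.05 and the predicted class flips.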

Model Inversion: In a model inversion attack, an attacker uses the model's predictions and some auxiliary information to reconstruct the original input data. Attackers may also query a model to train a surrogate model that imitates it (a related attack usually called model extraction).

Model Testing and Validation: Testing and validation are essential to any solution development process, but they are especially important in AI because of the additional risks involved. Models need to be evaluated for performance, accuracy, and bias.
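One way to make this evaluation enforceable is a release gate that blocks deployment when overall accuracy is too low or when accuracy differs too much between groups. The thresholds (`min_accuracy`, `max_gap`) and the per-group accuracy gap used as a bias check are illustrative assumptions; real evaluations typically use several metrics.

```python
from collections import defaultdict


def evaluate(y_true, y_pred, groups):
    """Return (overall accuracy, {group: accuracy})."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    per_group = {g: correct[g] / total[g] for g in total}
    overall = sum(correct.values()) / sum(total.values())
    return overall, per_group


def release_gate(y_true, y_pred, groups, min_accuracy=0.85, max_gap=0.05):
    """Return a list of failure reasons; an empty list means the model may ship."""
    overall, per_group = evaluate(y_true, y_pred, groups)
    gap = max(per_group.values()) - min(per_group.values())
    failures = []
    if overall < min_accuracy:
        failures.append(f"accuracy {overall:.2f} < {min_accuracy}")
    if gap > max_gap:
        failures.append(f"group accuracy gap {gap:.2f} > {max_gap}")
    return failures


y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A"] * 4 + ["B"] * 4
print(release_gate(y_true, y_pred, groups))
# ['accuracy 0.75 < 0.85', 'group accuracy gap 0.50 > 0.05']
```

Here the model is perfect on group A but only 50% accurate on group B, which an overall accuracy figure alone would partly hide.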

Deployment

Once deployed, Generative AI solutions need to be constantly monitored and audited to ensure transparency and gain user trust.

Infrastructure Security: The U.S. Department of Homeland Security's Cybersecurity and Infrastructure Security Agency (CISA) and the UK's National Cyber Security Centre (NCSC) have released guidance on AI security that covers the life cycle of AI systems, including secure deployment. This guidance emphasizes safeguarding infrastructure and models against compromise, establishing incident management processes, and adopting principles of responsible release.

Incident Response Plan: Incident management procedures are essential to deployment security. Teams must be prepared to respond to and recover from security incidents that may affect deployed AI systems.

Monitoring and Auditing: Implement mechanisms that monitor the AI system's behavior and performance in real time, tracking aspects such as data quality, model performance, and system outputs to detect anomalies or deviations from expected behavior.
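A simple building block for this kind of monitoring is a rolling z-score detector that flags a metric value far outside its recent range. The sketch assumes a single numeric metric (here, a hypothetical daily share of responses flagged by a content filter); the window size, warm-up length, and threshold are illustrative choices.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag a value that deviates from the recent window by more than
    `threshold` standard deviations (a simple z-score rule)."""

    def __init__(self, window: int = 20, threshold: float = 3.0, warmup: int = 5):
        self.values: deque[float] = deque(maxlen=window)
        self.threshold = threshold
        self.warmup = warmup  # minimum history before any value can be flagged

    def observe(self, value: float) -> bool:
        is_anomaly = False
        if len(self.values) >= self.warmup:
            mu = mean(self.values)
            sigma = pstdev(self.values)
            if sigma == 0:
                is_anomaly = value != mu  # flat history: any change is unusual
            else:
                is_anomaly = abs(value - mu) / sigma > self.threshold
        self.values.append(value)  # every value, including anomalies, joins the window
        return is_anomaly


detector = RollingAnomalyDetector()
stream = [0.02, 0.03, 0.025, 0.02, 0.03, 0.028, 0.022, 0.15]
flags = [detector.observe(v) for v in stream]
print(flags)  # [False, False, False, False, False, False, False, True]
```

Adding anomalous values to the window lets the baseline adapt to genuine shifts, at the cost of slowly normalizing a sustained problem; excluding them is the opposite trade-off.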

User Considerations

Privacy and security concerns are really all about the user.

Transparency: Transparency is essential for building trust in AI solutions, particularly in business applications that affect operations and strategic decision-making. Being transparent means telling users how the AI model arrived at an answer, including what data was used and how confident the model is.
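In practice, transparency can start with the shape of the response object: every answer carries the sources it was drawn from and a confidence value, and the UI shows both. The `TransparentAnswer` and `SourceRef` types below are hypothetical, and how `confidence` is estimated is system-specific and assumed here; raw model scores are not necessarily calibrated probabilities.

```python
from dataclasses import dataclass, field


@dataclass
class SourceRef:
    doc_id: str
    excerpt: str


@dataclass
class TransparentAnswer:
    text: str
    sources: list[SourceRef] = field(default_factory=list)
    confidence: float = 0.0  # 0..1; the estimation method is an assumption of this sketch

    def render(self) -> str:
        """Format the answer together with its confidence and supporting sources."""
        lines = [self.text, f"Confidence: {self.confidence:.0%}"]
        if self.sources:
            lines.append("Sources:")
            lines += [f"  [{i}] {s.doc_id}: {s.excerpt}" for i, s in enumerate(self.sources, 1)]
        else:
            lines.append("Sources: none (answer not grounded in enterprise data)")
        return "\n".join(lines)


ans = TransparentAnswer(
    "Employees accrue 20 PTO days per year.",
    [SourceRef("handbook", "PTO policy: 20 days per year.")],
    0.82,
)
print(ans.render())
```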

Fairness / Bias Auditing: Bias auditing is critical for detecting and addressing bias in training data, which is essential for the fairness and reliability of AI systems. Regular audits are important, but equally important is regularly training the AI engineers responsible for training data to recognize their own biases and identify potential issues in datasets.
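A common starting point for a bias audit of decisions such as candidate screening is to compare selection rates across groups. The sketch computes each group's selection rate divided by the highest group's rate (an impact ratio) and flags ratios below 0.8, the "four-fifths rule" from U.S. EEOC guidance, used here only as an illustrative threshold. This is one metric among many, not a complete fairness assessment.

```python
from collections import Counter


def selection_rates(decisions: list[int], groups: list[str]) -> dict[str, float]:
    """Fraction of positive decisions (e.g., 'advance to interview') per group."""
    selected = Counter()
    total = Counter()
    for decision, group in zip(decisions, groups):
        total[group] += 1
        selected[group] += int(decision)
    return {g: selected[g] / total[g] for g in total}


def impact_ratios(decisions: list[int], groups: list[str]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's selection rate."""
    rates = selection_rates(decisions, groups)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no positive decisions; impact ratios are undefined")
    return {g: rate / best for g, rate in rates.items()}


# Hypothetical audit data: 6 of 10 selected in group X, 3 of 10 in group Y.
decisions = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7
groups = ["X"] * 10 + ["Y"] * 10
ratios = impact_ratios(decisions, groups)
flagged = [g for g, r in ratios.items() if r < 0.8]
print(ratios, flagged)  # {'X': 1.0, 'Y': 0.5} ['Y']
```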

Reporting: Users need a way to report any bias they perceive and to provide feedback that helps address problems and improve the system.

Legal & Regulatory Considerations

Existing laws and regulations already apply to the use of AI solutions, and more are coming. Some existing regulations are quite broad; others are narrower. New laws that specifically address AI contain very technical definitions, and it will take a team effort to understand how they apply to an AI solution's use cases. Regulatory activity is happening around the world; a few highlights include:

Draft EU AI Act: Europe aims to lead on AI regulation and is nearing a final version of the EU AI Act. The draft takes a risk-based approach: some AI systems are banned outright, and high-risk AI systems are subject to strict requirements, including certification before release; testing; regular auditing; monitoring of privacy, security, and accuracy; technical documentation; record keeping (logs); transparency (notice to users and explanations of what the system may not do well); human oversight; and failsafe measures. Limited-risk AI systems would be subject to transparency requirements (letting people know the systems are in use).

U.S. Federal Laws: In the U.S., some existing laws already apply to AI, such as Section 5 of the FTC Act, which prohibits unfair or deceptive practices, and nondiscrimination laws in areas like credit and employment decisions. New regulatory initiatives are also under discussion, such as the White House “Blueprint for an AI Bill of Rights” and the AI Executive Order.

State and Local Laws: State privacy laws are beginning to address AI (sometimes under the label “automated decision making”), and California has recently released draft rules under its sweeping privacy law, the California Consumer Privacy Act (CCPA), that relate to the use of AI. Some jurisdictions are also enacting laws that address very specific AI use cases, such as New York City Local Law 144, which covers automated employment decision tools.

All these emerging guidelines illustrate how important AI security is today. At Samvid, we strongly believe that implementing strong controls with a verification layer that protects privacy, checks responses for bias, and provides transparency and traceability is not only prudent business but also mandatory for enterprise adoption.
